Tests for the univ() function - Normal Distribution

Ivan Jacob Agaloos Pesigan

2020-07-17

Source: vignettes/tests/tests_univ_norm.Rmd

Univariate Data Generation - Normal Distribution

Sample Size and Monte Carlo Replications

| Variable | Description | Notation | Values |
|---|---|---|---|
| n | Sample size. | \(n\) | 1000 |
| R | Monte Carlo replications. | \(R\) | 1000 |

Population Parameters

| Variable | Description | Notation | Values |
|---|---|---|---|
| mu | Population mean. | \(\mu\) | 0 |
| sigma2 | Population variance. | \(\sigma^2\) | 1 |
| sigma | Population standard deviation. | \(\sigma\) | 1 |

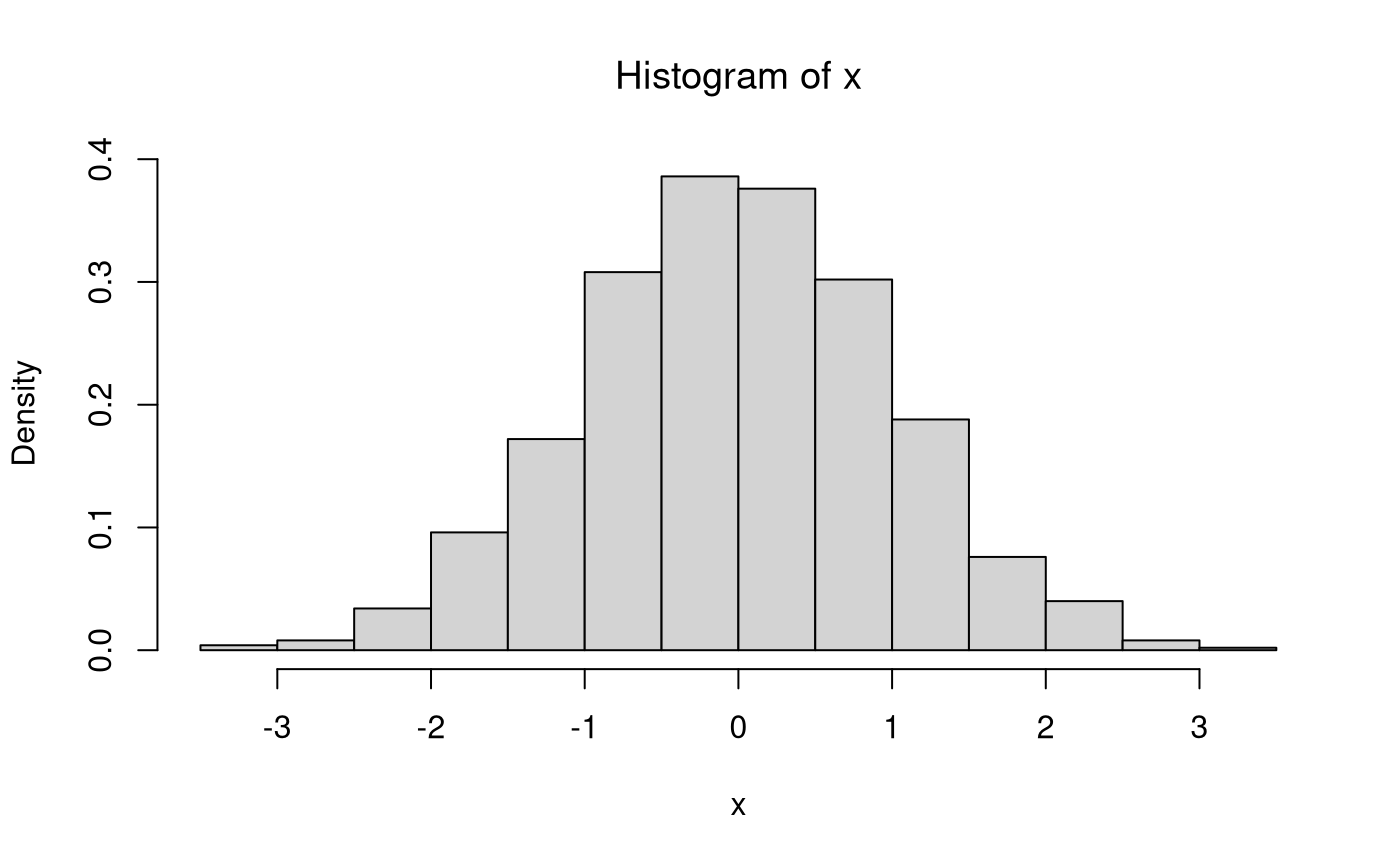

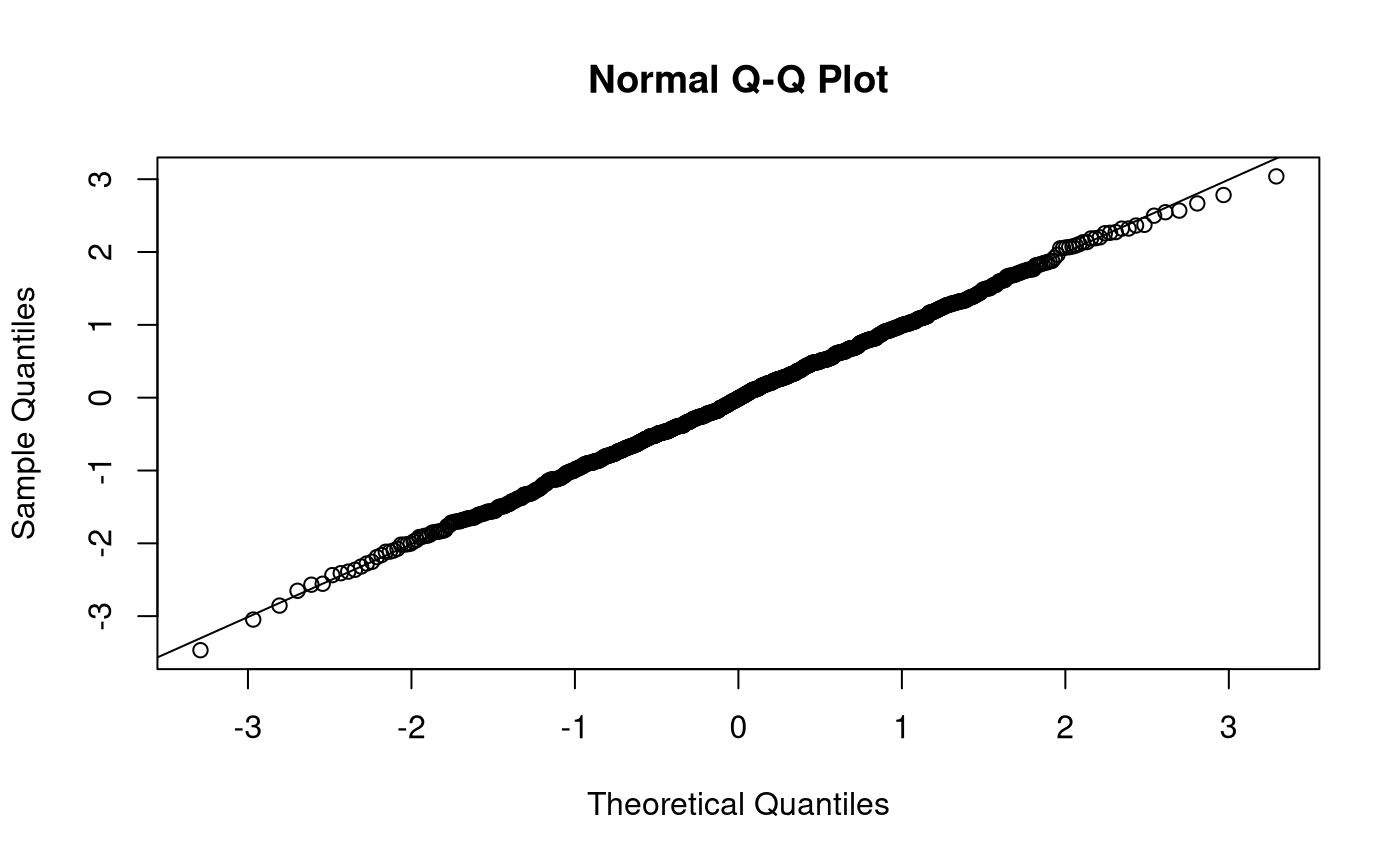

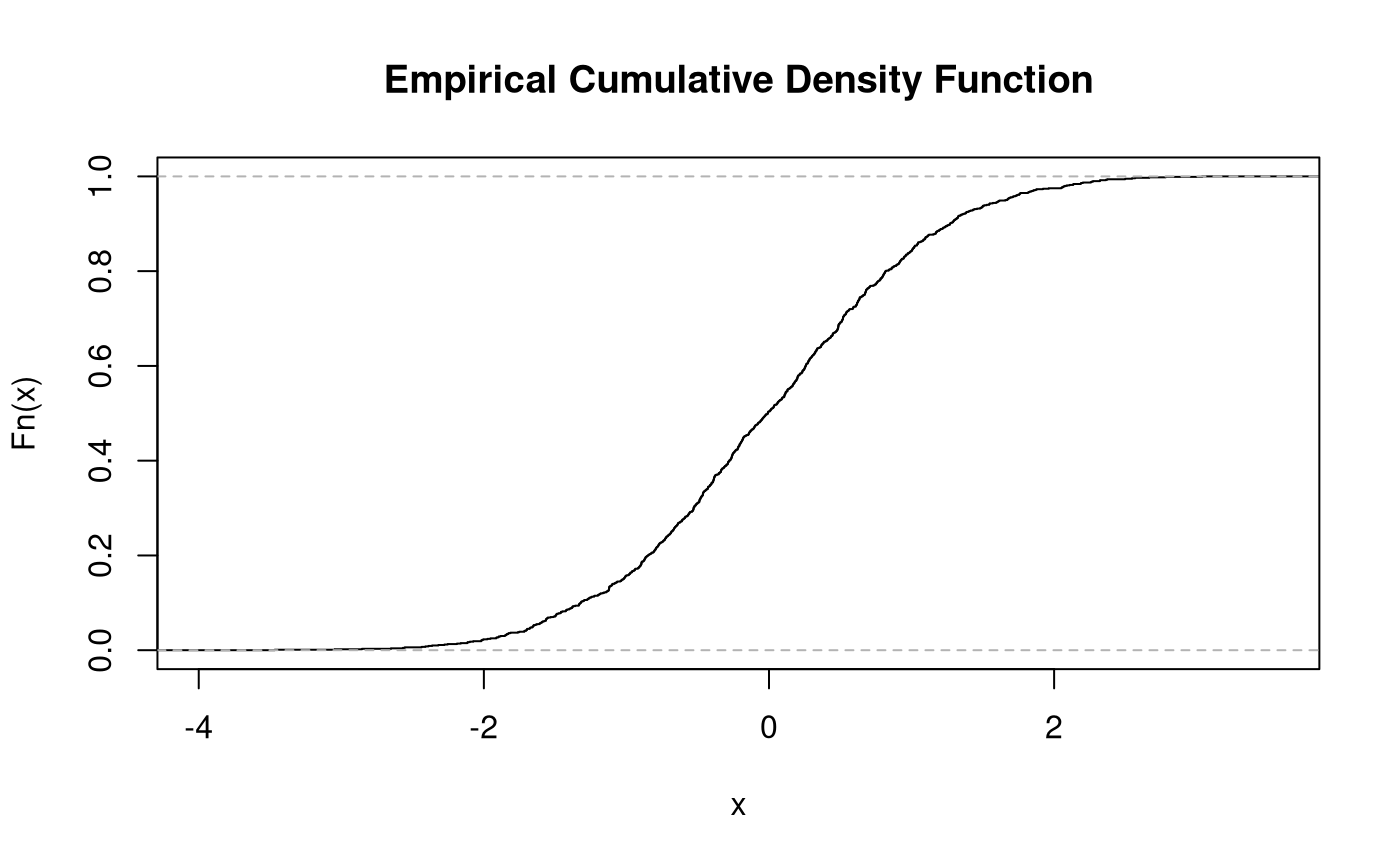

Sample Data

\[\begin{equation} X \sim \mathcal{N} \left( \mu = 0, \sigma^2 = 1 \right) \end{equation}\]

The random variable \(X\) takes on the value \(x\) for each observation \(\omega \in \Omega\).

- \(\omega\) refers to units or observations

- \(\Omega\) refers to the collection of all units, that is, the set of possible outcomes (the sample space), contained in the set \(S\).

A random variable acts as a function inasmuch as it maps each observation \(\omega \in \Omega\) to a value \(x\).

\[\begin{equation} x = X \left( \omega \right) \end{equation}\]

\[\begin{equation} \mathbf{x} = \begin{bmatrix} x_1 = X \left( \omega_1 \right) \\ x_2 = X \left( \omega_2 \right) \\ x_3 = X \left( \omega_3 \right) \\ \vdots \\ x_i = X \left( \omega_i \right) \\ \vdots \\ x_n = X \left( \omega_n \right) \end{bmatrix}, \quad i \in \left\{ 1, 2, 3, \dots, n \right\} \end{equation}\]
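
As a rough illustration of the mapping above, the sketch below draws \(n\) values from \(\mathcal{N} \left( 0, 1 \right)\) with base R's `rnorm()`. It is a stand-in and not the package's `univ()` function, whose signature is not shown here; the seed and the object names `x` and `estimates` are assumptions made for the example.

```r
# Illustrative stand-in using base R; this is not the univ() function itself.
set.seed(42)            # assumed seed, only so the sketch is reproducible
n <- 1000               # sample size
mu <- 0                 # population mean
sigma2 <- 1             # population variance
sigma <- sqrt(sigma2)   # population standard deviation

# Each element x[i] plays the role of x_i = X(omega_i).
x <- rnorm(n = n, mean = mu, sd = sigma)

# Sample estimates of mu, sigma^2, and sigma.
estimates <- c(muhat = mean(x), sigma2hat = var(x), sigmahat = sd(x))
print(estimates)
```

With \(n = 1000\), the three estimates should land near 0, 1, and 1, though the exact values depend on the seed.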

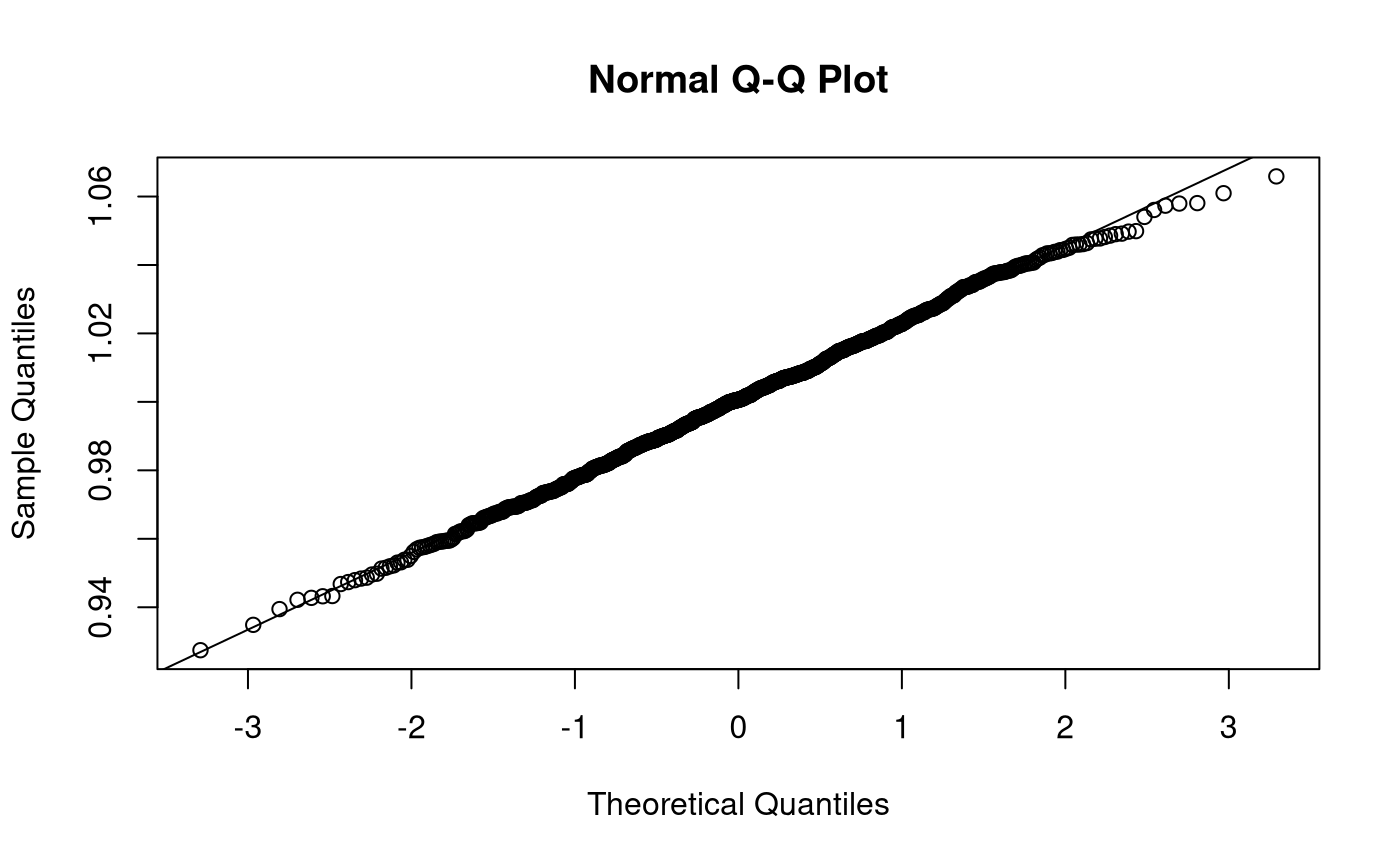

Monte Carlo Simulation

| Variable | Description | Notation | Values | Mean of estimates | Variance of estimates | Standard deviation of estimates |
|---|---|---|---|---|---|---|
| mu | Population mean. | \(\mu\) | 0 | 0.0007422 | 0.0009953 | 0.0315482 |
| sigma2 | Population variance. | \(\sigma^2\) | 1 | 1.0014328 | 0.0020260 | 0.0450106 |
| sigma | Population standard deviation. | \(\sigma\) | 1 | 1.0004634 | 0.0005063 | 0.0225006 |
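
The summaries in the table can be approximated with a short Monte Carlo loop. The sketch below is an assumption about how such a simulation might be written in base R (using `rnorm()` and `replicate()`), not the vignette's own code; the seed and the names `one_rep`, `est`, and `summary_table` are hypothetical, so the numbers it prints will differ slightly from those reported above.

```r
# Illustrative Monte Carlo sketch in base R; not the package's own code.
set.seed(42)   # assumed seed
n <- 1000      # sample size
R <- 1000      # Monte Carlo replications
mu <- 0        # population mean
sigma <- 1     # population standard deviation

# One replication: draw a sample and return the three estimates.
one_rep <- function() {
  x <- rnorm(n = n, mean = mu, sd = sigma)
  c(muhat = mean(x), sigma2hat = var(x), sigmahat = sd(x))
}

# 3 x R matrix: one row per estimator, one column per replication.
est <- replicate(R, one_rep())

# Mean, variance, and standard deviation of the estimates across replications.
summary_table <- data.frame(
  mean = rowMeans(est),
  var = apply(est, 1, var),
  sd = apply(est, 1, sd)
)
print(summary_table)
```

Each row of `summary_table` corresponds to one estimator, and its three columns line up with the last three columns of the table.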

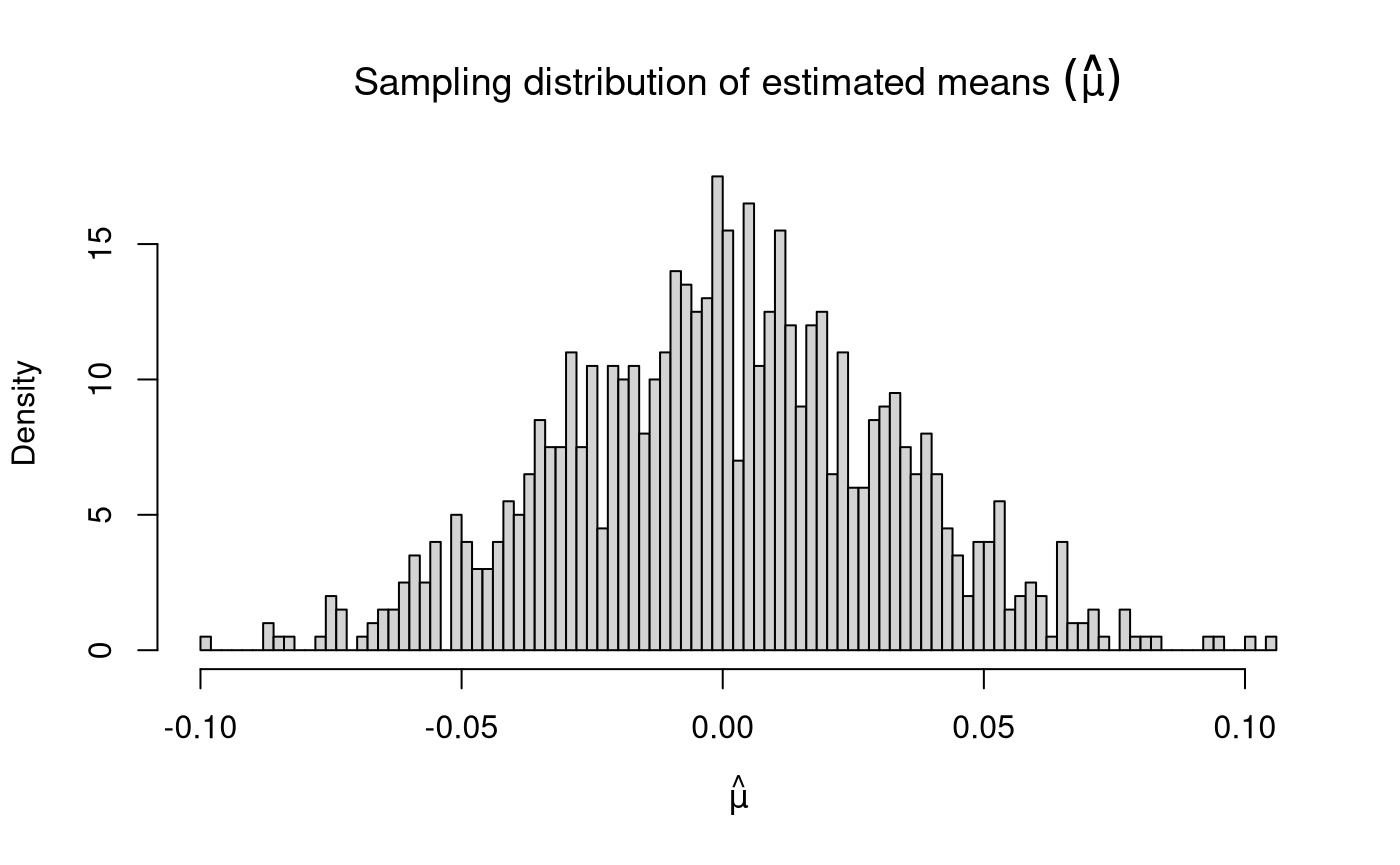

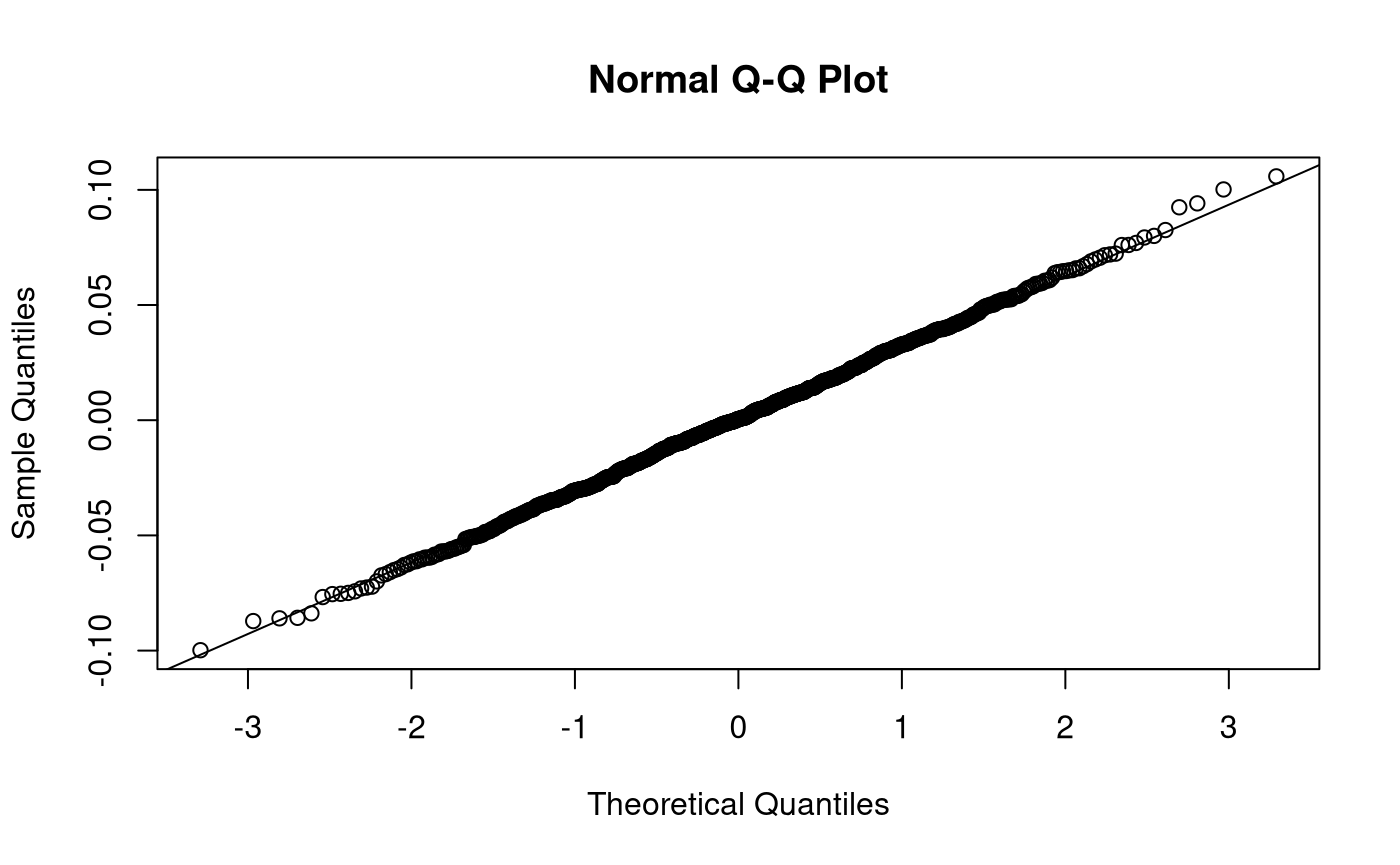

Sampling Distribution of Sample Means

\[\begin{equation} \hat{\mu} \sim \mathcal{N} \left( \mu, \frac{\sigma^2}{n} \right) \end{equation}\]

\[\begin{equation} \mathbb{E} \left[ \hat{\mu} \right] = \mu \end{equation}\]

\[\begin{equation} \mathrm{Var} \left( \hat{\mu} \right) = \frac{\sigma^2}{n} \end{equation}\]

\[\begin{equation} \mathrm{se} \left( \hat{\mu} \right) = \frac{\sigma}{\sqrt{n}} \end{equation}\]
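
Plugging in the values used here (\(\sigma^2 = 1\), \(n = 1000\)) gives a quick check against the Monte Carlo results reported above. The arithmetic below is an added illustration:

\[\begin{equation} \mathrm{Var} \left( \hat{\mu} \right) = \frac{1}{1000} = 0.001, \quad \mathrm{se} \left( \hat{\mu} \right) = \frac{1}{\sqrt{1000}} \approx 0.0316 \end{equation}\]

Both values are close to the Monte Carlo variance (0.0009953) and standard deviation (0.0315482) of the estimates of \(\mu\).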

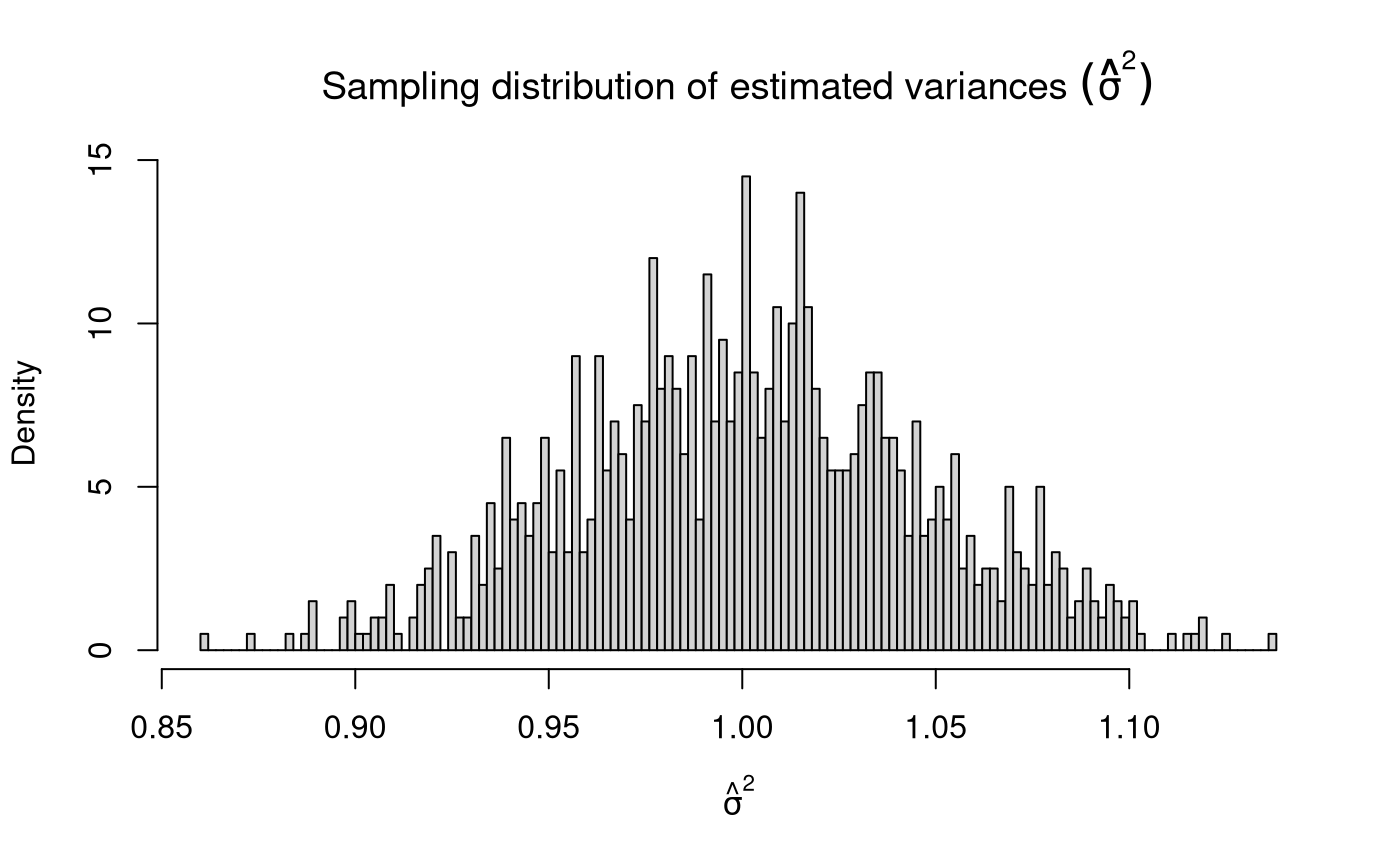

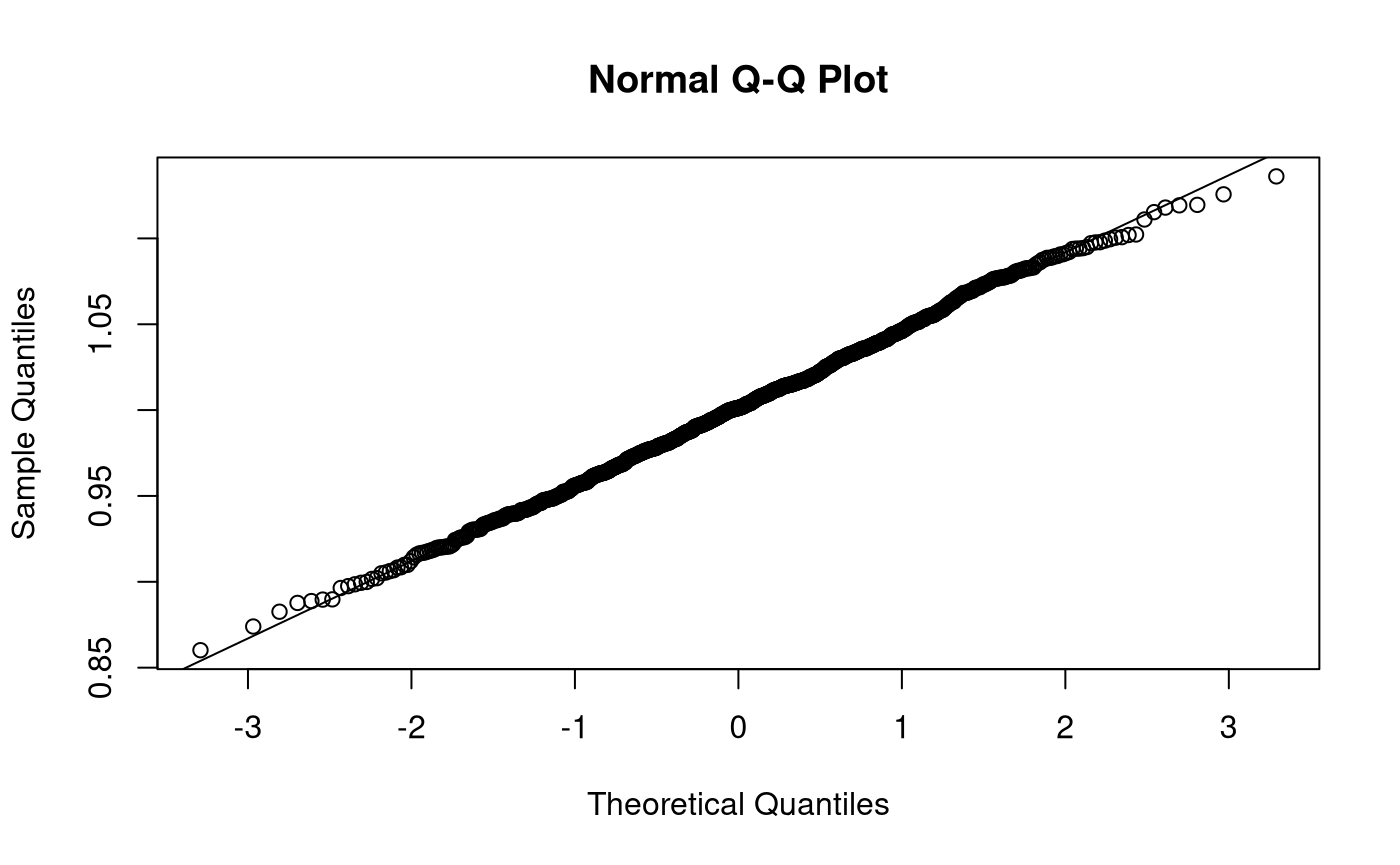

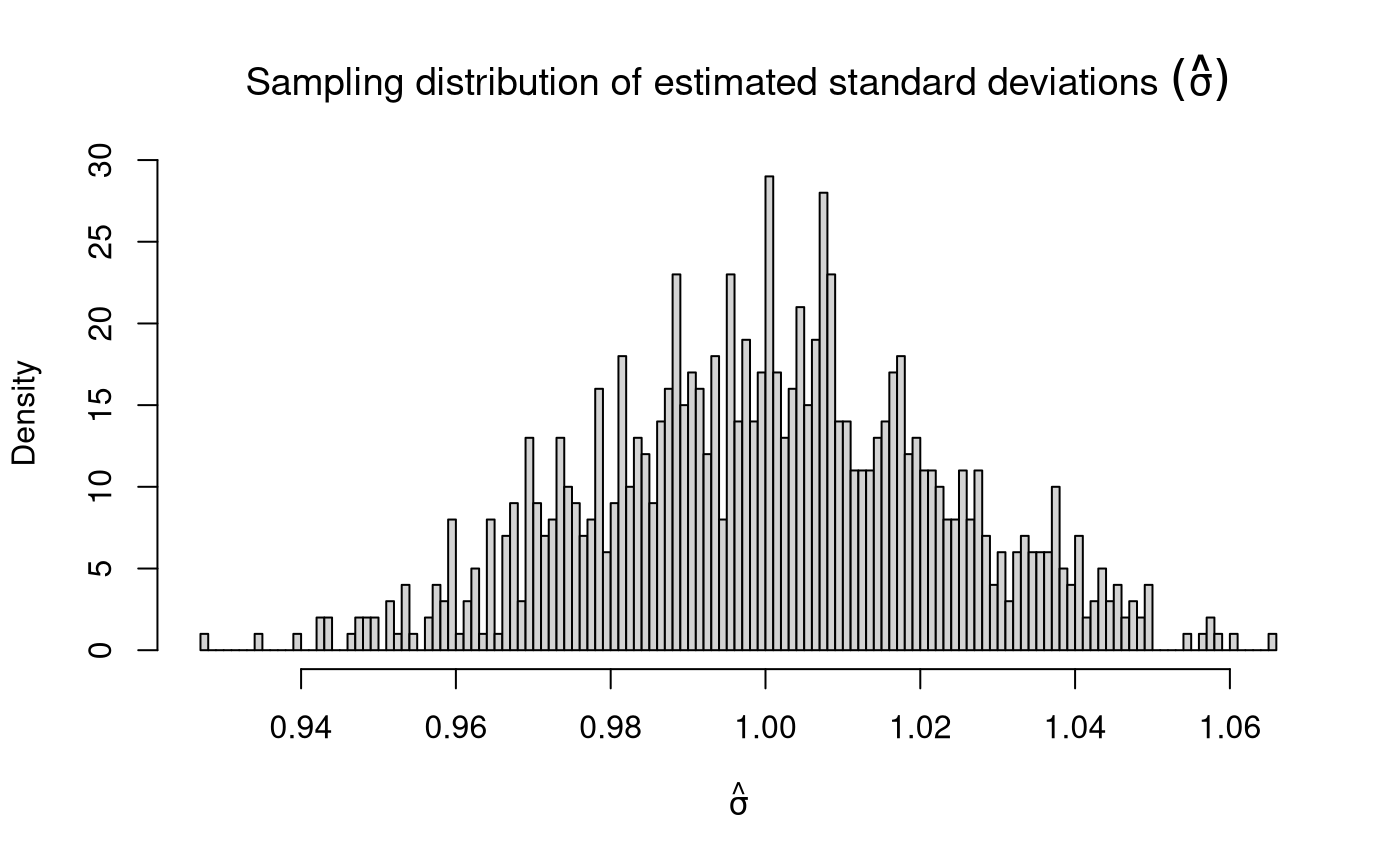

Sampling Distribution of Sample Variances

\[\begin{equation} \frac{ \left( n - 1 \right) \hat{\sigma}^2 }{ \sigma^2 } \sim \chi^2 \left( k = n - 1 \right), \quad \text{when} \quad X \sim \mathcal{N} \left( \mu, \sigma^2 \right) \end{equation}\]

\[\begin{equation} \mathbb{E} \left[ \hat{\sigma}^2 \right] = \sigma^2 \end{equation}\]

\[\begin{equation} \mathrm{Var} \left( \hat{\sigma}^2 \right) = \frac{ 2 \sigma^4 }{ n - 1 }, \quad \text{where} \quad X \sim \mathcal{N} \left( \mu, \sigma^2 \right) \end{equation}\]

When \(X \sim \mathcal{N} \left( \mu, \sigma^2 \right)\), the scaled sample variance \(\left( n - 1 \right) \hat{\sigma}^2 / \sigma^2\) follows \(\chi^2 \left( k = n - 1 \right)\). As \(k\) tends to infinity, this distribution approaches normality. When \(X\) is not normally distributed, the variance of the sampling distribution of the sample variances takes on a different functional form, which depends on the fourth central moment of \(X\), and the chi-square result does not hold, however large the sample size.
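
The variance of the sample variance under normality can be worked out from the chi-square result. The short derivation below is an added sketch that relies on the standard fact that a \(\chi^2 \left( k \right)\) variable has variance \(2k\):

\[\begin{equation} \mathrm{Var} \left( \frac{ \left( n - 1 \right) \hat{\sigma}^2 }{ \sigma^2 } \right) = 2 \left( n - 1 \right) \quad \Longrightarrow \quad \mathrm{Var} \left( \hat{\sigma}^2 \right) = \frac{ \sigma^4 }{ \left( n - 1 \right)^2 } \cdot 2 \left( n - 1 \right) = \frac{ 2 \sigma^4 }{ n - 1 } \end{equation}\]

With \(\sigma^2 = 1\) and \(n = 1000\), this gives \(2 / 999 \approx 0.002002\), close to the Monte Carlo variance of the estimates of \(\sigma^2\) (0.0020260).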

    library(testthat)

    # means, mu, sigma2, and sigma are assumed to be defined by earlier vignette chunks.
    test_that("expected values (means) of muhat, sigma2hat, sigmahat", {
      expect_equivalent(
        round(means, digits = 0),
        c(mu, sigma2, sigma)
      )
    })